I’d hire an AI agent to do this GTM task …

Agentic AI workflows sound great, but how do you set them up so you can trust them? Start with something you already do. Read: "Everything Starts Out Looking Like a Toy" #230

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. “What is Data Operations?” was the first post in the series.

This week’s toy: soon, your headphones will measure the ambient noise in your environment and create an adaptive sound field of quiet, a “sound bubble” of sorts, around your location. Conceptually, this is similar to Apple’s “Spatial Audio” codec and promises to extend the sound envelope to a larger area. Edition 230 of this newsletter is here: it’s December 23, 2024.

If you have a comment or are interested in sponsoring, hit reply.

The Big Idea

A short long-form essay about data things

⚙️ I’d hire an AI agent to do this GTM task …

Agentic workflows seem to be everywhere these days. Kaladhar Voruganti defines them as semi-independent workers capable of action:

An AI workflow is agentic when it has the ability to act as an intelligent agent. This means that it can perceive the environment around it and take specific actions based on what it perceives. The fact that it has agency signifies that it doesn’t need to be prompted by a human in order to do this.

It’s early days for this technology, and it’s hard to identify which jobs an AI agent will do well in your environment. Ideally, you’d test a few frequently occurring jobs to learn the threshold at which an AI agent produces quality work.

Identifying suitable tasks in the GTM environment

When would you hire an AI agent to improve your GTM setup?

To identify a task an AI agent could complete, I’d look at a few aspects of the work:

Events that happen frequently, like contacts owned by someone other than the account owner

The rules for fixing them are well-known, like all contacts for an account need to be owned by the account owner

The change is a “2-way door”, a reversible change with little consequence, like changing who owns a contact

The task is completed today by a person who evaluates correctness, like checking that the right person owns a contact

A caveat to this list: these are all things you could solve with traditional automation in your GTM stack, whether with Salesforce Flows or with tools like Pipedream or Zapier. The difference is that traditional automation handles closed questions, where you have already evaluated most of the possible outcomes.
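To make the caveat concrete, here is the ownership rule from the list written as an ordinary deterministic check, the kind of logic a Flow or Zapier step would encode. It’s a minimal sketch: the `Account` and `Contact` records, their fields, and `find_owner_mismatches` are hypothetical stand-ins for your own CRM export, not a Salesforce API.

```python
from dataclasses import dataclass


@dataclass
class Account:
    account_id: str
    owner: str


@dataclass
class Contact:
    contact_id: str
    account_id: str
    owner: str


def find_owner_mismatches(
    accounts: list[Account], contacts: list[Contact]
) -> list[tuple[str, str, str]]:
    """Return (contact_id, current_owner, expected_owner) for every contact
    whose owner differs from the owner of its account."""
    owner_by_account = {a.account_id: a.owner for a in accounts}
    mismatches = []
    for c in contacts:
        expected = owner_by_account.get(c.account_id)
        # Skip contacts whose account isn't in the extract instead of guessing an owner.
        if expected is not None and c.owner != expected:
            mismatches.append((c.contact_id, c.owner, expected))
    return mismatches


accounts = [Account("A1", "dana"), Account("A2", "lee")]
contacts = [
    Contact("C1", "A1", "dana"),
    Contact("C2", "A1", "sam"),  # owned by someone other than the account owner
    Contact("C3", "A2", "lee"),
]
print(find_owner_mismatches(accounts, contacts))  # [('C2', 'sam', 'dana')]
```

Every outcome of a check like this is known in advance, which is exactly what makes it a closed question.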

We want to create building blocks so AI agents can get “one in a row” successes, and make it possible to compare the agent’s work with a person doing the same job.

Building the first tasks

What would one of these tasks look like when we describe the agent, the expected task, and the expected outcome?

Agent Configuration:

Role: Sales Operations Expert

Goal: Ensure comprehensive account management by identifying accounts with fewer than two contacts and prompting account owners to add additional contacts.

Backstory: An experienced sales operations professional dedicated to maintaining the integrity and efficiency of the sales process, with a keen eye for detail and a proactive approach to data completeness.

Task Assignment:

Task: Monitor Account Contact Count and Notify Owners

Description: Regularly scan the CRM database to detect accounts linked to fewer than two contacts. Automatically send a notification to the account owner, informing them of the insufficient contact count and prompting the addition of more contacts.

Expected Output: Account owners are notified of accounts with insufficient contacts and take action to add additional contacts, ensuring comprehensive account management.
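One way to keep a configuration like this reviewable is to store it as plain data next to the deterministic part of the task. The sketch below is a hypothetical Python encoding: the field names mirror the configuration above (agent frameworks such as CrewAI use similar role, goal, backstory, description, and expected-output fields), while `accounts_needing_contacts` and `notification` are illustrative helpers. It doesn’t call a model or send messages; it only shows the rule the agent is expected to apply.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentConfig:
    role: str
    goal: str
    backstory: str


@dataclass(frozen=True)
class TaskSpec:
    name: str
    description: str
    expected_output: str
    min_contacts: int = 2  # "fewer than two contacts" from the task description


agent = AgentConfig(
    role="Sales Operations Expert",
    goal="Identify accounts with fewer than two contacts and prompt owners to add more.",
    backstory="An experienced sales operations professional focused on data completeness.",
)
task = TaskSpec(
    name="Monitor Account Contact Count and Notify Owners",
    description="Scan the CRM for accounts linked to fewer than two contacts; notify the owner.",
    expected_output="Owners are notified and add contacts.",
)


def accounts_needing_contacts(
    account_owners: dict[str, str], contact_account_ids: list[str], spec: TaskSpec
) -> list[tuple[str, str, int]]:
    """Return (account_id, owner, contact_count) for accounts below spec.min_contacts."""
    counts = Counter(contact_account_ids)  # missing accounts count as 0
    return [
        (account_id, owner, counts[account_id])
        for account_id, owner in sorted(account_owners.items())
        if counts[account_id] < spec.min_contacts
    ]


def notification(account_id: str, owner: str, count: int, spec: TaskSpec) -> str:
    """Build the message the agent would send to the account owner."""
    return (f"Hi {owner}, account {account_id} has {count} contact(s). "
            f"Please add at least {spec.min_contacts - count} more.")


owners = {"acme": "dana", "innovate": "lee", "greenfield": "sam"}
contact_accounts = ["acme", "innovate", "innovate", "innovate"]  # one account id per contact
for account_id, owner, count in accounts_needing_contacts(owners, contact_accounts, task):
    print(notification(account_id, owner, count, task))
```

Keeping the threshold in the task spec means a change to the rule of engagement is a one-line, reviewable edit.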

How do you start? Give 100 accounts to the AI agent and 100 to a person, and have both complete the same task. After a few days, measure whether the AI agent does well enough to take on more accounts.
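Scoring the pilot can be as simple as comparing the two cohorts on the same measures. This sketch assumes a reviewer labels each account as correctly fixed or wrongly changed; the `CohortResult` type, the measures, and the 5-point fix-rate gap and 2% error ceiling are hypothetical choices, and the numbers are illustrative, not results from a real pilot.

```python
from dataclasses import dataclass


@dataclass
class CohortResult:
    name: str
    accounts_assigned: int
    accounts_fixed: int            # reviewer confirmed the fix is correct
    accounts_wrongly_changed: int  # reviewer found the change incorrect

    @property
    def fix_rate(self) -> float:
        return self.accounts_fixed / self.accounts_assigned

    @property
    def error_rate(self) -> float:
        return self.accounts_wrongly_changed / self.accounts_assigned


def agent_ready_for_more(agent: CohortResult, person: CohortResult,
                         max_fix_gap: float = 0.05, max_error_rate: float = 0.02) -> bool:
    """Hypothetical decision rule: the agent must fix nearly as many accounts
    as the person and make few incorrect changes."""
    return (person.fix_rate - agent.fix_rate <= max_fix_gap
            and agent.error_rate <= max_error_rate)


# Illustrative numbers only.
agent = CohortResult("agent", 100, 88, 1)
person = CohortResult("person", 100, 91, 0)
print(f"agent fix rate {agent.fix_rate:.0%}, person fix rate {person.fix_rate:.0%}")
print("expand the agent's share:", agent_ready_for_more(agent, person))
```

Tracking wrong changes separately from missed fixes matters: an agent that skips accounts is annoying, but one that changes the wrong records costs trust.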

We are not doing this to take work away from people. We’re doing it to remove boring, repetitive, and well-documented work so that it “just happens” as part of the expected rules of engagement and data quality in your GTM organization.

Compounding benefits from the established rules

Here’s the ultimate goal. If you can teach an agent (or a series of agents) how to apply, document, and reinforce your rules of engagement, you’ve created a resource to remove busy work from your plate.

This is an interesting and under-invested area of GTM and sales enablement software. We want to help sellers spend more time selling and less time acting as data entry clerks. Yet we’re also missing the opportunity to review and explain why we do the things we do at the moment a data anomaly or other change happens.

What would this look like in practice? It could start with a Slack message (or a voice message):

GTMBot, let me know if there are any accounts I need to fix

👾 GTMBot here! Let me check Salesforce... one sec...

Here are the accounts that need fixing:

1️⃣ Account: Acme Corp

• Issue: Only 1 contact listed

• Fix: Add at least one more contact to reduce risk.

2️⃣ Account: Innovate Solutions

• Issue: Missing contact details (phone/email)

• Fix: Update with complete and verified info.

3️⃣ Account: Greenfield Logistics

• Issue: No recent activity in 60+ days

• Fix: Reach out to re-engage.
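The rules behind a reply like this are ordinary checks; the agent’s job would be understanding the request and phrasing the answer. Here is a hypothetical Python sketch of the rule-checking and formatting half, assuming a simple `AccountSnapshot` record (not a Salesforce object) and taking the 60-day threshold from the mock reply. Fetching data from Salesforce and posting to Slack are left out.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AccountSnapshot:
    name: str
    contact_count: int
    contacts_missing_details: int  # contacts lacking a phone or email
    last_activity: date


def issues_for(acct: AccountSnapshot, today: date, stale_days: int = 60) -> list[tuple[str, str]]:
    """Apply the three example rules and return (issue, fix) pairs."""
    issues = []
    if acct.contact_count < 2:
        issue = "No contacts listed" if acct.contact_count == 0 else "Only 1 contact listed"
        issues.append((issue, "Add at least one more contact to reduce risk."))
    if acct.contacts_missing_details > 0:
        issues.append(("Missing contact details (phone/email)", "Update with complete and verified info."))
    if (today - acct.last_activity).days >= stale_days:
        issues.append((f"No recent activity in {stale_days}+ days", "Reach out to re-engage."))
    return issues


def gtmbot_reply(accounts: list[AccountSnapshot], today: date) -> str:
    """Format flagged accounts as a numbered chat reply."""
    flagged = [(a, issues_for(a, today)) for a in accounts]
    flagged = [(a, issues) for a, issues in flagged if issues]
    if not flagged:
        return "All clear: no accounts need fixing."
    lines = ["Here are the accounts that need fixing:"]
    for i, (acct, issues) in enumerate(flagged, start=1):
        lines.append(f"{i}. Account: {acct.name}")
        for issue, fix in issues:
            lines.append(f"   • Issue: {issue}")
            lines.append(f"   • Fix: {fix}")
    return "\n".join(lines)


today = date(2024, 12, 23)
accounts = [
    AccountSnapshot("Acme Corp", 1, 0, date(2024, 12, 10)),
    AccountSnapshot("Innovate Solutions", 3, 1, date(2024, 12, 1)),
    AccountSnapshot("Greenfield Logistics", 2, 0, date(2024, 10, 1)),
    AccountSnapshot("Northwind", 4, 0, date(2024, 12, 20)),  # healthy, not listed
]
print(gtmbot_reply(accounts, today))
```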

Is this a real thing yet? Nope. But it easily could be, given the capabilities of today’s tooling, and it could get better over time. The key thing to notice is how it lets sellers self-serve while applying the expected rules of engagement and data quality.

A living system to document and reinforce ROE

What outcome do we want for a system like this? Automation to document and reinforce the rules of engagement.

This might sound like a silly goal until you think about the work that ops folks do on a daily basis. If you don’t automate the “easy stuff” and the rules that already exist, there’s less time and energy to work on strategic projects that impact the business in a meaningful way.

There will always be anomalies created when sellers and ops pros enter unexpected information into GTM tools. But a system that starts the process of fixing those proactively and documents the fix is a system with compounding benefits.
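Documenting the fix can mean writing down, next to each change, which rule triggered it and why. The sketch below is a minimal, hypothetical Python changelog: `ChangeLog`, `FixRecord`, and the contact dictionaries are illustrative, not part of any CRM. Because a contact-owner change is a 2-way door, the log also keeps enough detail to reverse it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class FixRecord:
    record_id: str
    field_name: str
    old_value: str
    new_value: str
    rule: str        # the rule of engagement that triggered the change
    reason: str      # human-readable explanation of this specific fix
    applied_at: str  # ISO 8601 timestamp in UTC


@dataclass
class ChangeLog:
    entries: list[FixRecord] = field(default_factory=list)

    def apply_owner_fix(self, contact: dict, account_owner: str, rule: str) -> None:
        """Reassign a contact to its account owner and record why."""
        old = contact["owner"]
        if old == account_owner:
            return  # already compliant, nothing to log
        contact["owner"] = account_owner
        self.entries.append(FixRecord(
            record_id=contact["id"],
            field_name="owner",
            old_value=old,
            new_value=account_owner,
            rule=rule,
            reason=f"Contact owner '{old}' did not match account owner '{account_owner}'.",
            applied_at=datetime.now(timezone.utc).isoformat(),
        ))

    def undo_last(self, contacts_by_id: dict[str, dict]) -> None:
        """Reverse the most recent fix using the stored old value."""
        entry = self.entries.pop()
        contacts_by_id[entry.record_id][entry.field_name] = entry.old_value


ROE_RULE = "All contacts for an account are owned by the account owner."
contacts = {"C2": {"id": "C2", "owner": "sam"}}
log = ChangeLog()
log.apply_owner_fix(contacts["C2"], "dana", ROE_RULE)
print(log.entries[0].reason)
log.undo_last(contacts)
print(contacts["C2"]["owner"])  # sam
```

Over time, the log itself becomes the documentation: a record of which rules fire most often and why.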

Agents won’t think for themselves, but they are good at summarizing, documenting, and recommending solutions to anomalies based on existing rules of engagement. (No, don’t let them do important things until you’ve validated their output.)
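One way to act on that caution is to send agent recommendations through a gate: only reversible fixes of a type you’ve already validated are applied automatically, and everything else goes to a person. This is a hypothetical Python sketch; the `Recommendation` fields, the `route` function, and the example actions are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Recommendation:
    record_id: str
    action: str
    reversible: bool  # is this a "2-way door"?
    validated: bool   # has this kind of fix passed your pilot review?


def route(rec: Recommendation) -> Route:
    """Auto-apply only reversible, already-validated fixes; everything else waits for a person."""
    if rec.reversible and rec.validated:
        return Route.AUTO_APPLY
    return Route.HUMAN_REVIEW


recs = [
    Recommendation("C2", "reassign contact owner", reversible=True, validated=True),
    Recommendation("A7", "merge duplicate accounts", reversible=False, validated=False),
    Recommendation("C9", "update contact email", reversible=True, validated=False),
]
for rec in recs:
    print(rec.record_id, rec.action, "->", route(rec).value)
```

As a fix type proves itself in review, flipping its `validated` flag moves it from the review queue to automatic handling.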

What’s the takeaway? AI agents have great promise if you tell them what to do and validate that they do it. If you give them a series of tasks your ops team completes today, you can build automations that free up time for strategic thinking.

Links for Reading and Sharing

These are links that caught my 👀

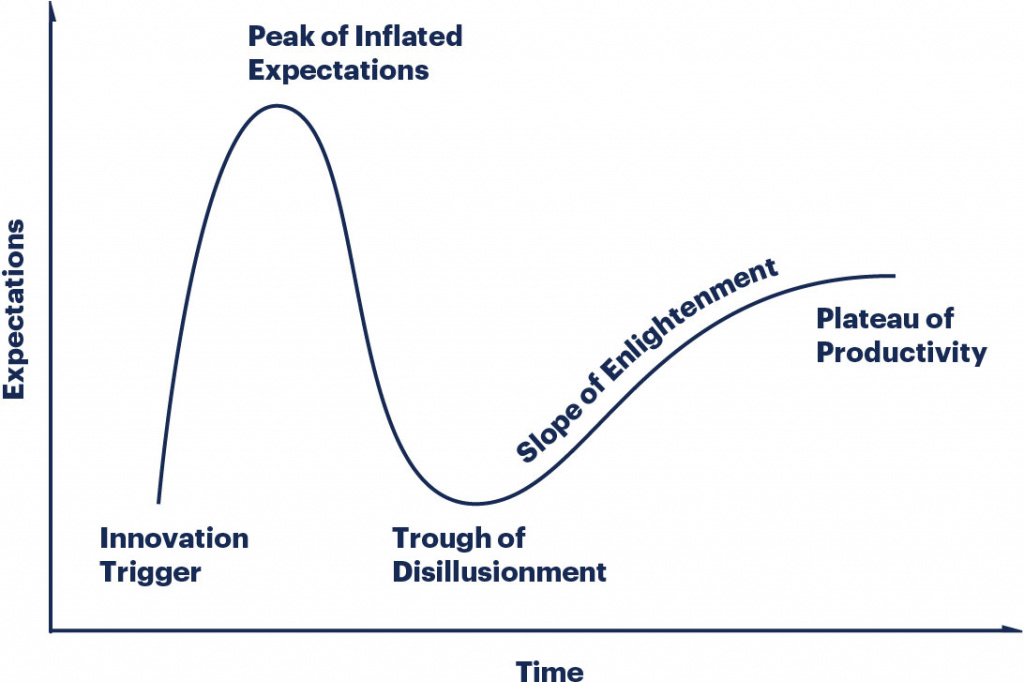

1/ AI Agent Roadmap - Insight Partners put out a market map of the AI agent landscape this week. When new technology emerges, getting up the S-curve of technology adoption is hard without concrete use cases and repeatable results. The next step to making agentic architecture real and reliable is to place it in a regular workflow and measure it like any other technology. When agents win, it will be because they work better than other options.

2/ Yes, headlights are brighter - If you’ve wondered about headlight brightness while night driving … yes, they are brighter. It’s a race to improve visibility for drivers while not ruining the view for oncoming vehicles.

3/ Hamburglar would be proud - This account of security vulnerabilities in the McDonald’s delivery app in India is a master class in understanding and analyzing system design. Read this to think about mitigating risk and evaluating the environment where your apps are present.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon