Practice "Red Flag" moments in data operations

The U.S. Air Force invented the Red Flag exercise to keep pilots safe. What's the analog for operations to help practice things that go wrong? Read: "Everything Starts out Looking Like a Toy" #143

A HUGE thank you to our newsletter sponsor Pocus for your support.

If you're reading this but haven't subscribed, join our community of curious GTM and product leaders. If you’d like to sponsor the newsletter, reply to this email.

Brought to you by Pocus, a Revenue Data Platform built for go-to-market teams to analyze, visualize, and action data about their prospects and customers without needing engineers. Pocus helps companies like Miro, Webflow, Loom, and Superhuman save 10+ hours/week digging through data to surface millions in new revenue opportunities.

Hi, I’m Greg 👋! I write essays on product development, including system “handshakes”, the expectations for workflow, and the jobs we expect data to do. This started with “What is Data Operations?” and grew into Data & Ops, a fractional product team that creates amazing UX and product experiences.

This week’s toy: cool spinning diagrams made only with CSS. I’m continually amazed by what you can do in a browser. We think of it as a flat piece of glass, but it’s actually a full computing environment. Makes you think.

Edition 143 of this newsletter is here: it’s May 1, 2023.

The Big Idea

A short long-form essay about data things

⚙️ Practice “Red Flag” moments in data operations

During the Vietnam War, heavy losses of aircraft in air-to-air combat prompted the U.S. Air Force to change how it trained new pilots for warfare. Analysis showed that fighter pilots who survived their first 10 combat missions were much more likely to survive the rest of their time in combat.

The resulting exercise, Red Flag, was created in 1975 to give pilots realistic combat experience in a controlled environment. It’s not the same as warfare, but it’s about as real as it gets. For a sense of what it’s like, check out this 2020 documentary on Red Flag.

Why does Red Flag matter?

Red Flag matters for the people involved because it lets them practice incredibly complicated and dangerous things in an environment where failure is far less likely to be fatal than it is in real combat.

In Red Flag, personnel learn these critical lessons:

Everyone’s role is important. In combat, you will be under stress, so you need to know your role and exactly what you are expected to deliver.

You are part of a team. If you watch the documentary above, you’ll hear the protagonist say that at the beginning of the exercise, he cared only about winning, and then realized that there was more to the practice than registering simulated kills. The key element was building teamwork and support.

Practice is necessary to deliver results. Some things are very difficult to learn without actually doing them. You can think about how to respond to a near-collision in flight, but until you feel it, it’s hypothetical. (There’s one in this documentary, and it hits hard emotionally.)

Mistakes are necessary to learn how it feels to resolve them. When you are “killed” in Red Flag, you get to fly home and think about what happened. That isn’t possible in real combat.

Standard procedures will keep you safe. There’s a reason surgeons, pilots, and other professionals rely on checklists: they help prevent errors and raise accountability for everyone.

The Red Flag exercise enables everyone involved to try out these techniques in a situation that feels real.

What does this have to do with data operations?

At this point, you might be asking how the experience of some fighter pilots could possibly relate to the work we do managing data. (It’s a reasonable question.)

Take the simple example of sending an email to a group of people. What could possibly go wrong?

Copy mistakes could (and will) happen

It’s easy to cut and paste copy into an email-sending tool. Whether you’re using Gmail, Outreach, or Marketo, there is usually a sample out there that someone made before you.

The copy could be wrong. I’m not talking about a missed space here or there; I’m referring to a prior version of a send that hasn’t yet been edited. A link could fail to resolve to the expected place. A UTM parameter might be missing. These are all easy mistakes to make.
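Clicking every link is still the real test, but a quick script can catch a missing UTM parameter before you even get to the sample send. This is a minimal sketch, assuming your copy is plain text or HTML and that your team requires `utm_source`, `utm_medium`, and `utm_campaign` on every link (that required set is an assumption; swap in your own). It only checks that links are well formed and tagged, not that they resolve to the expected place.

```python
import re
from urllib.parse import urlparse, parse_qs

# Assumption: these are the UTM parameters your team requires on every link.
REQUIRED_UTM = {"utm_source", "utm_medium", "utm_campaign"}

# A deliberately simple pattern for http(s) links in plain-text or HTML copy.
LINK_PATTERN = re.compile(r"""https?://[^\s"'<>)]+""")


def check_links(copy: str) -> list[str]:
    """Return one message per link that has no host or is missing a required UTM parameter."""
    problems = []
    for url in LINK_PATTERN.findall(copy):
        parsed = urlparse(url)
        if not parsed.netloc:
            problems.append(f"{url}: no host")
            continue
        # parse_qs drops blank values by default, so "utm_campaign=" counts as missing.
        present = set(parse_qs(parsed.query))
        missing = REQUIRED_UTM - present
        if missing:
            problems.append(f"{url}: missing {', '.join(sorted(missing))}")
    return problems
```

Run it on the exact copy you pasted into the sending tool, not on the draft you think you pasted.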

You also don’t want mismatched custom fields, or missing fallback text for prospects who lack the data a field needs because the information wasn’t loaded correctly.
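To find records that would produce a broken greeting before you send, you can scan the template for merge fields and flag any record that is blank for a field with no fallback. The `{{field|fallback}}` syntax below is hypothetical; Outreach, Marketo, and other tools each have their own token syntax, so treat this as a sketch of the check rather than any tool’s actual behavior.

```python
import re

# Hypothetical merge-field syntax: {{field}} or {{field|fallback text}}.
# Outreach, Marketo, and other tools each use their own token syntax.
MERGE_FIELD = re.compile(r"\{\{\s*(\w+)\s*(?:\|([^}]*))?\}\}")


def find_gaps(template: str, records: list[dict]) -> list[tuple[int, str]]:
    """List (row index, field) pairs where a record has no value and the field has no fallback."""
    fields = MERGE_FIELD.findall(template)  # [(name, fallback), ...]; fallback is "" if absent
    gaps = []
    for i, record in enumerate(records):
        for name, fallback in fields:
            value = str(record.get(name) or "").strip()
            if not value and not fallback:
                gaps.append((i, name))
    return gaps


def render(template: str, record: dict) -> str:
    """Fill each merge field, falling back to the default text when the value is blank."""
    def substitute(match: re.Match) -> str:
        name, fallback = match.group(1), match.group(2) or ""
        return str(record.get(name) or "").strip() or fallback

    return MERGE_FIELD.sub(substitute, template)
```

With `"Hi {{first_name|there}}, how is {{company}} doing?"`, a record with a blank first name still reads “Hi there,” but a blank company gets flagged by `find_gaps`, because that field has no fallback.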

Recommended: complete a copy pass for every email, along with a sample email sent to a known address. Make sure to click all of the links.

Loading data can fail or go spectacularly wrong

Preparing a .csv file for a data load doesn’t always work. From mismatched column names to data type failures, sometimes prospect loads don’t go as planned.
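Mismatched column names and data type failures are both cheap to catch before the load. Here is a minimal sketch using Python’s standard `csv` module; the `EXPECTED` column spec is hypothetical, so match it to the import fields your CRM or email tool actually uses.

```python
import csv
import io

# Hypothetical column spec for a prospect load; match it to your tool's import fields.
EXPECTED = {"email": str, "first_name": str, "company": str, "employees": int}


def validate_csv(text: str) -> list[str]:
    """Return a list of header and type problems found before a load."""
    reader = csv.DictReader(io.StringIO(text))
    # Strip stray whitespace from headers so " email" still matches "email".
    reader.fieldnames = [h.strip() for h in (reader.fieldnames or [])]
    headers = set(reader.fieldnames)
    errors = []
    if missing := set(EXPECTED) - headers:
        errors.append(f"missing columns: {sorted(missing)}")
    if extra := headers - set(EXPECTED):
        errors.append(f"unexpected columns: {sorted(extra)}")
    for row in reader:
        for column, kind in EXPECTED.items():
            value = (row.get(column) or "").strip()
            if column == "email" and value and "@" not in value:
                errors.append(f"line {reader.line_num}: {value!r} does not look like an email")
            if value and kind is int:
                try:
                    int(value)
                except ValueError:
                    errors.append(f"line {reader.line_num}: {column}={value!r} is not an integer")
    return errors
```

`reader.line_num` counts lines read from the file, so the line numbers line up with what you see in a text editor even when a quoted field spans several lines.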

When you’re in the business of updating email lists, a few things usually help a lot:

Place your original data in a “Raw Data” tab and don’t change it. Future you will thank you.

When you make changes, use lookup fields, or otherwise transform data, add an extra column to your spreadsheet with a formula that checks your work against the current data (and at least one that checks the data against the original).

Keep notes in your spreadsheet about what you did to change the data. It’s often hard to remember the exact change, and much faster to review your notes.

Remove duplicate emails from your list. This is a built-in feature in most spreadsheets these days, so there’s no reason to skip it.

If you use filters to do an intermediate selection of data, make sure to remove your filters when creating load files to confirm you see all of the data.
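If your list lives outside a spreadsheet, or you want to see exactly which rows a de-duplication removes, a small function can keep the first row for each address and hand back the rest for review. This is a sketch under one stated assumption: it treats addresses as case-insensitive and ignores stray whitespace, which is how most mailbox providers behave in practice, though the standard technically allows a case-sensitive local part.

```python
def dedupe_emails(rows: list[dict], key: str = "email") -> tuple[list[dict], list[dict]]:
    """Keep the first row for each email; return (kept, dropped) so drops can be reviewed."""
    seen = set()
    kept, dropped = [], []
    for row in rows:
        # Assumption: treat addresses as case-insensitive and ignore stray whitespace.
        normalized = (row.get(key) or "").strip().lower()
        if not normalized or normalized in seen:
            dropped.append(row)  # blank or repeated email
        else:
            seen.add(normalized)
            kept.append(row)
    return kept, dropped
```

Returning the dropped rows, rather than silently discarding them, gives you something to paste into your notes tab.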

Recommended: avoid re-sorting your data more than is absolutely necessary, because sorting combined with incomplete filtering can be a sad story. Also include a column that checks the integrity of your updated data set against itself and against the original.
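The integrity check can also be written as a comparison between the untouched “Raw Data” rows and your updated rows, keyed on email. In this sketch, `frozen` is a hypothetical parameter naming the columns you did not mean to change: if a sort with an incomplete filter shifted a column, those rows show up there. The duplicate count is the “against itself” half of the check.

```python
def compare_to_raw(raw: list[dict], updated: list[dict], key: str = "email",
                   frozen: tuple[str, ...] = ()) -> dict:
    """Summarize how an updated data set differs from the untouched raw rows.

    frozen lists columns you did NOT mean to change; a difference there often
    means a sort or filter misaligned rows.
    """
    def index(rows: list[dict]) -> dict:
        return {(r.get(key) or "").strip().lower(): r for r in rows}

    raw_by_key, new_by_key = index(raw), index(updated)
    shared = raw_by_key.keys() & new_by_key.keys()
    changed = [k for k in shared
               if any(raw_by_key[k].get(col) != new_by_key[k].get(col) for col in frozen)]
    return {
        "raw_rows": len(raw),
        "updated_rows": len(updated),
        "duplicate_keys_in_updated": len(updated) - len(new_by_key),
        "dropped": sorted(raw_by_key.keys() - new_by_key.keys()),
        "added": sorted(new_by_key.keys() - raw_by_key.keys()),
        "changed_frozen_columns": sorted(changed),
    }
```

A non-empty `dropped` list is not always wrong (you may have filtered on purpose), but it should match what your notes say you did.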

Find the unusual mistakes before they happen

The most common email-sending mistakes that aren’t about content are about volume. These really come down to the assumptions spam filters make about what a spammy send looks like.

In a marketing email, many of us know to avoid certain words in the subject line, too many images in the first email, and sends without an unsubscribe option. But we might not think about the other signals an email send gives off.

Avoid sending too many emails at one time. Avoid sending too many emails to the same domain. Avoid sending emails from different senders to the same domain or account. (You can check these counts with a pivot table on the recipient’s email domain or account, which gives you a good picture of the histogram of your send.)
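The pivot table can also be done in a few lines of code, which makes it easy to rerun every time the list changes. This sketch assumes each planned send is a `(sender, recipient)` pair; the `max_per_domain` threshold of 5 is a hypothetical default, not a number any spam filter publishes.

```python
from collections import Counter, defaultdict


def domain_of(email: str) -> str:
    return email.strip().lower().rsplit("@", 1)[-1]


def send_profile(sends: list[tuple[str, str]], max_per_domain: int = 5) -> dict:
    """Profile (sender, recipient) pairs the way a pivot table on recipient domain would."""
    per_domain = Counter(domain_of(recipient) for _, recipient in sends)
    senders_by_domain = defaultdict(set)
    for sender, recipient in sends:
        senders_by_domain[domain_of(recipient)].add(sender.strip().lower())
    return {
        "total": len(sends),
        "per_domain": per_domain.most_common(),  # the "histogram" of the send
        "over_limit": sorted(d for d, n in per_domain.items() if n > max_per_domain),
        "multiple_senders": sorted(d for d, s in senders_by_domain.items() if len(s) > 1),
    }
```

`multiple_senders` covers the third rule: it lists domains that would hear from more than one of your senders in the same send.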

Recommended: send emails at the cadence of a person. That means limiting your automated send to perhaps 200-250 emails in a day, and probably lower per account.
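One way to plan a person-paced send is to assign each recipient to the earliest day that still has room under both a daily cap and a per-domain cap. This is a greedy sketch: the daily cap of 200 comes from the recommendation above, while the per-domain cap of 10 is a hypothetical number to tune for your own sends. Note that it can reorder recipients, so later days may mix in domains from earlier in the list.

```python
from collections import defaultdict


def plan_days(emails: list[str], daily_cap: int = 200, per_domain_cap: int = 10) -> list[list[str]]:
    """Greedily assign each recipient to the earliest day under both caps."""
    days: list[list[str]] = []
    domain_counts: list[defaultdict] = []  # one {domain: count} per day
    for email in emails:
        domain = email.strip().lower().rsplit("@", 1)[-1]
        for i, day in enumerate(days):
            if len(day) < daily_cap and domain_counts[i][domain] < per_domain_cap:
                day.append(email)
                domain_counts[i][domain] += 1
                break
        else:
            # No existing day has room, so start a new one.
            days.append([email])
            domain_counts.append(defaultdict(int, {domain: 1}))
    return days
```

Each inner list is one day’s batch; load them into your tool as separate sends or schedule them on consecutive days.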

You get one chance to start an email send

When you press “send” on an email, it can be an anxiety-producing event. It doesn’t have to be that way.

If you’ve tested your data, validated it on at least a few records once it’s been loaded to your email marketing solution or your CRM, and sent a sample email to confirm the copy, you’ve eliminated a lot of the problems you’re likely to have.

I usually add at least two records to my email sends that use Gmail’s plus addressing (name+tag@domain.com), with the date as the tag. These addresses might look like greg+05012023@sampleemail.com, where greg@sampleemail.com is an address I can receive that is outside the domain I’m sending from. (Gmail is good for this!)

The tactic for this send is to have one “happy path” record and one record that is missing data. After I’ve validated those as test sends, I’ll send a test using a real record to a known email address.
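Generating the two seed records from a base address and the send date keeps them consistent from send to send. This sketch uses the MMDDYYYY tag from the example above; the field names and the `-missing` suffix on the second address are hypothetical, chosen so the two seeds don’t collide when you de-duplicate the list.

```python
from datetime import date


def seed_records(base_email: str, send_date: date) -> list[dict]:
    """Build a happy-path seed and a missing-data seed using plus addressing."""
    local, domain = base_email.split("@", 1)
    tag = send_date.strftime("%m%d%Y")  # MMDDYYYY, e.g. 05012023
    return [
        # Happy path: every merge field has a value (hypothetical field names).
        {"email": f"{local}+{tag}@{domain}", "first_name": "Greg", "company": "Sample Co"},
        # Missing data: blank fields should trigger your fallback text.
        {"email": f"{local}+{tag}-missing@{domain}", "first_name": "", "company": ""},
    ]
```

Both messages land in the same inbox, and the tag tells you at a glance which send and which scenario you are looking at.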

Recommended: when you start a sequence of emails, limit the send to 25-30 records at first. Then check what was sent. If an unlikely mistake slipped through, you’ve limited the exposure and can fix it before sending to everyone else.
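The pilot batch is simple to set up, but it helps to put your seed records at the front so that you are among the first recipients. A minimal sketch; `pilot_size=25` follows the recommendation above, and everything else is illustrative.

```python
def split_pilot(records: list[dict], seeds: list[dict], pilot_size: int = 25) -> tuple[list[dict], list[dict]]:
    """Return (pilot, held_back): seed records go first, then real records up to pilot_size."""
    # Seeds first, so your own inbox sees the send before most prospects do.
    ordered = seeds + [r for r in records if r not in seeds]
    return ordered[:pilot_size], ordered[pilot_size:]
```

Send only the pilot, check it, and release the held-back records once you’re satisfied.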

Applying these lessons to ops

Which lessons from Red Flag should we apply to ops? Here are a few things I’ve learned that make operational processes run better.

Have a standard procedure for anything you do more than a few times. Write it out in a way that anyone can understand.

Document your choices and what you do. This looks like a decision log that helps future you remember what happened.

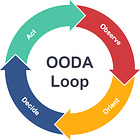
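A decision log doesn’t need special tooling; an append-only CSV works. This sketch assumes four hypothetical columns (what, why, who, when) and fills in the timestamp for you. It is one possible shape for a log, not a reproduction of the one pictured.

```python
import csv
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone
from pathlib import Path


@dataclass
class Decision:
    what: str       # the change you made, e.g. "Dropped 12 duplicate emails"
    why: str        # the reason, in a sentence
    who: str
    when: str = ""  # filled with the current UTC time if left blank


def log_decision(path: Path, decision: Decision) -> None:
    """Append one decision to a CSV log, writing the header row if the file is new or empty."""
    if not decision.when:
        decision.when = datetime.now(timezone.utc).isoformat(timespec="seconds")
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=[fld.name for fld in fields(Decision)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(decision))
```

Appending rather than editing means earlier decisions stay intact, which is the point when future you is trying to reconstruct what happened.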

Use tools like the OODA loop (Observe, Orient, Decide, Act) to respond to unexpected outcomes. It’s a bit meta: a process for handling changes in process.

These are on my list. Create your own, tailored to the ops situations you find yourself resolving.

What’s the takeaway? You don’t have to be a fighter pilot to appreciate the benefit of practicing for things that might go wrong. Use a checklist to follow the procedure you created when you weren’t under pressure, so you perform better when you are.
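If you want the checklist to be enforced rather than remembered, you can encode it as a list of named checks that all run before a send. This is a minimal sketch: the `PRE_SEND` items and the keys of the `send` dictionary are hypothetical stand-ins for whatever your own procedure records. Every check runs, with no early exit, so you see all the failures at once.

```python
from typing import Callable

# Each item: (description, check). A check takes the send and returns True if it passes.
Check = tuple[str, Callable[[dict], bool]]


def run_checklist(send: dict, checklist: list[Check]) -> list[str]:
    """Run every check and return the descriptions of the ones that failed."""
    return [desc for desc, check in checklist if not check(send)]


# Hypothetical pre-send checklist built from the steps in this essay.
PRE_SEND: list[Check] = [
    ("Sample email sent to a known address", lambda s: s.get("sample_sent", False)),
    ("All links clicked", lambda s: s.get("links_checked", False)),
    ("Duplicates removed", lambda s: s.get("duplicates", 1) == 0),
    ("Pilot batch is 30 records or fewer", lambda s: 0 < s.get("pilot_size", 0) <= 30),
]
```

An empty result means you’re clear to press “send”; anything else is a list of what to fix first.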

Links for Reading and Sharing

These are links that caught my 👀

1/ How good are your GPT prompts? - Are you “prompt engineering” or writing some prompts you hope will work out with AI? Mitchell Hashimoto helps us understand the difference between these ideas and lays a foundation for measuring and improving prompts. Hint: think like an engineer and you are more likely to get a consistent output, understand the lineage of your response, and be better able to troubleshoot hallucinations.

2/ It’s time to build - I enjoyed this piece by Elad Gil on building a sustainable moat for a startup. Simply put, at the beginning of a startup’s life there is very little competitive advantage in most businesses. It is the choices we make to build optionality over time that end up compounding. With Gil’s advice in mind, building a platform capability as the primitive underneath a feature is a powerful pull. But we have to balance that desire to solve every problem with “what can we ship today that will make a difference for a customer,” because there might never be a time when you can take advantage of the platform.

3/ To be terrific, you must be specific - Jonah Berger shares a fascinating story about the power of language and knowing when to use concrete examples. When you are talking about today, Berger relates, concrete language like “the lime green Nikes you ordered” works better than “expect your order to arrive within the expected period.” If you want to describe the future and your vision, abstract language can be quite helpful, but in the present it’s always better to be clear and specific.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

Want more essays? Read on Data Operations or other writings at gregmeyer.com.

Want to book a discovery call to talk about how we can work together?

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon