The Hidden Cost of Not Building Internal Tools

Your team is already building the tools they need. Are you helping them mold that approach into an efficient and delightful internal product? Read: "Everything Starts Out Looking Like a Toy" #247

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. What is Data Operations? was the first post in the series.

This week’s toy: something as simple as a font choice can make a profound difference in understanding. Check out this new font from Microsoft Research, designed to help kids, early readers, and dyslexic folks improve their reading. We don’t spend enough time matching impact to typography.

Edition 247 of this newsletter is here - it’s April 21, 2025.

Thanks for reading! Let me know if there’s a topic you’d like me to cover.

The Big Idea

A short long-form essay about data things

⚙️ The Hidden Cost of Not Building Internal Tools

Why waiting for perfect software is slowing your team down

You've seen this movie.

Last quarter, Sarah's RevOps team at Acme Corp hit a wall. Their CRM's reporting module couldn't handle the new custom fields they needed for their enterprise sales pipeline. The vendor promised a fix “in the next release.” Meanwhile, sales reps manually copied data between spreadsheets, spending 3 hours per week on workarounds.

Across 50 reps, that's 150 hours per week of lost productivity. At $50/hour, that's $7,500 weekly (or $390,000 annually) in wasted time. All for a problem their data analyst solved in a few hours with a Python script that automatically backfills the data into their data warehouse. Instead of doing the work directly in the CRM, the team got a report with the right information in another place, built from the CRM data.
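If you want to run the same back-of-the-envelope math for your own team, here is a minimal sketch. The rep count, hours, and hourly rate come from the example above; the 52-week year and the function name are my assumptions, so swap in whatever fits your situation.

```python
# Back-of-the-envelope cost of a manual workaround.
REPS = 50                    # from the example above
HOURS_PER_REP_PER_WEEK = 3   # from the example above
HOURLY_COST_USD = 50         # from the example above
WEEKS_PER_YEAR = 52          # assumption: no adjustment for holidays or vacation


def workaround_cost(reps, hours_per_rep, hourly_cost, weeks=WEEKS_PER_YEAR):
    """Return (weekly hours, weekly cost, annual cost) for a manual workaround."""
    weekly_hours = reps * hours_per_rep
    weekly_cost = weekly_hours * hourly_cost
    annual_cost = weekly_cost * weeks
    return weekly_hours, weekly_cost, annual_cost


weekly_hours, weekly_cost, annual_cost = workaround_cost(
    REPS, HOURS_PER_REP_PER_WEEK, HOURLY_COST_USD
)
print(f"{weekly_hours} hours/week, ${weekly_cost:,}/week, ${annual_cost:,}/year")
# prints: 150 hours/week, $7,500/week, $390,000/year
```

Even if you discount the result for vacations and slow weeks, the number is usually large enough to justify a few hours of scripting.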

There was no official fix for the vendor not supporting the functionality the team needed. But someone on your team already built it. You found this experiment when you asked: “how did you solve it?” Too often, that answer lives in a spreadsheet, a Google Script, or some SQL code that only one person understands.

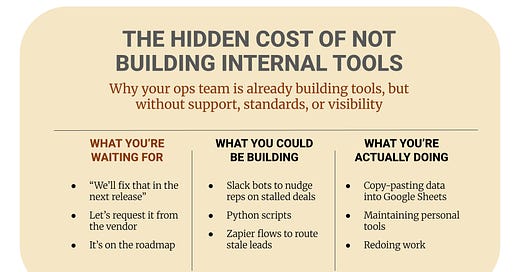

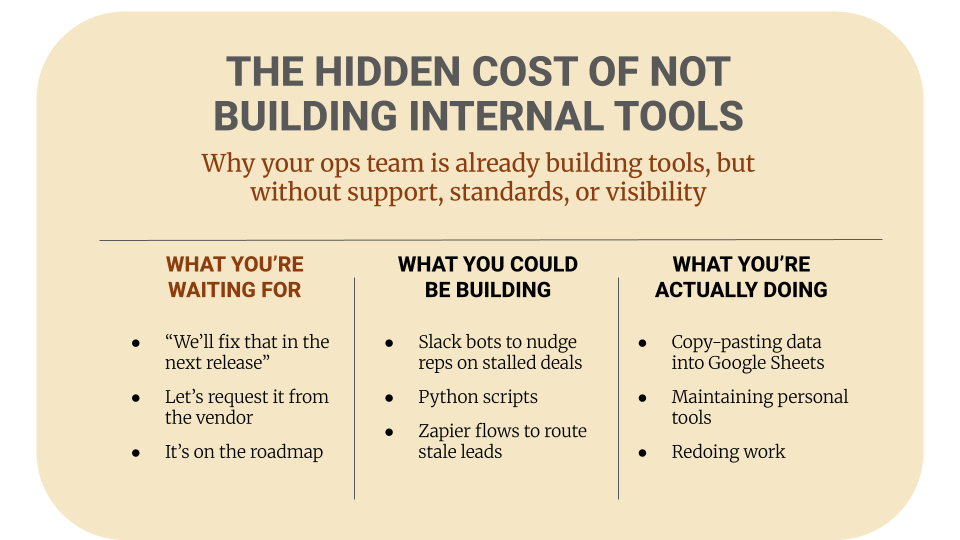

This is the real hidden cost of not officially building internal tools. You are already building tools but doing it unofficially, inefficiently, and invisibly.

The Problem: Internal Tooling by Accident

Some operations teams don't think of themselves as builders. They see internal tooling as “someone else's job”. That might mean outsourcing the job to product, engineering, or a vendor’s roadmap.

But the reality is that they have to do their job, whether the tools support them or not.

The best teams build prototypes to solve their roadblocks and share and enable each other

The average team continues doing things while blocked and waits for someone else to solve the problem

Your most valuable tool builders are already on your team and are building solutions that could help everyone. They need proper channels and standards to do their best work. While people are waiting, the building is happening. But it is fragmented, often undocumented, and fragile.

Pay attention! The people building these shadow tools aren't the problem. They are your champions and could (should) be building the tools of the future because they:

Understand the real pain points of the organization

Maintain the domain knowledge to solve these problems

Are motivated enough to build solutions

The challenge isn't to stop internal builders from building. It’s to give them the right framework to build better.

Standardize efforts, document solutions, and give peeps enabling tools, and you'll transform shadow IT into a powerful force for change.

Invisible Costs You're Already Paying

Here are some things you might not be considering with The Way Things Are Today:

Time tax on humans: the “just ten minutes” someone spends doing a daily manual data pull adds up fast. Multiply it across the team, and you're losing hours weekly.

Silent decision delays: when it’s hard to answer questions with data, decisions slow down or get made with gut feel instead of with an agreed-upon method.

Opportunity loss: if your best operators spend their time wrangling CSVs, they're not experimenting, optimizing, or scaling.

Shadow engineering: internal tools are being built today in places like Airtable, Sheets, or Notion. They often have little testing, scarce version control, and contested ownership.

How could we make this situation better? By modeling another approach.

What Internal Tooling Looks Like

Let’s set the stage for what we mean when we talk about internal tooling.

Internal tooling is not a fully-fledged product, yet. It might contain the pieces that you could string together and create a connected product surface, or it might seem discrete.

We're talking about tools like:

A script that alerts reps when deals are stuck in stage for 14+ days

A Slack bot that posts weekly quota attainment for each AE

A Chrome extension that fixes a clunky third-party interface

A Google Sheet that autoloads enrichment data via the Clearbit API

These tools are small, specific, and fast. They solve actual pain, not theoretical process gaps.
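To show how small these tools can be, here is a rough sketch of the first idea in the list, the stuck-deal alert. Everything in it is illustrative: the `Deal` fields, the sample data, and the function names are hypothetical, and a real version would read deals from your CRM export and send the messages wherever your reps already look.

```python
from dataclasses import dataclass
from datetime import date

STUCK_AFTER_DAYS = 14  # threshold from the "14+ days" example above


@dataclass
class Deal:
    # Hypothetical shape of a deal record; map these to your CRM's fields.
    name: str
    owner: str
    stage: str
    stage_entered: date


def find_stuck_deals(deals, today, threshold_days=STUCK_AFTER_DAYS):
    """Return (deal, days_in_stage) pairs for deals at or past the threshold."""
    stuck = []
    for deal in deals:
        days_in_stage = (today - deal.stage_entered).days
        if days_in_stage >= threshold_days:
            stuck.append((deal, days_in_stage))
    return stuck


def format_alert(deal, days_in_stage):
    """Build the message a rep would see."""
    return f"{deal.owner}: '{deal.name}' has been in {deal.stage} for {days_in_stage} days"


# Made-up sample data for illustration only.
sample_deals = [
    Deal("Globex renewal", "Dana", "Negotiation", date(2025, 4, 1)),
    Deal("Initech pilot", "Lee", "Discovery", date(2025, 4, 15)),
    Deal("Umbrella expansion", "Dana", "Proposal", date(2025, 4, 7)),
]

for deal, days in find_stuck_deals(sample_deals, today=date(2025, 4, 21)):
    print(format_alert(deal, days))
```

Passing `today` in as a parameter instead of calling `date.today()` inside the function keeps the logic easy to test, which matters once other people start relying on the alert.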

When you amass a bunch of these tools around a set of related use cases, you’re getting a lot closer to a product.

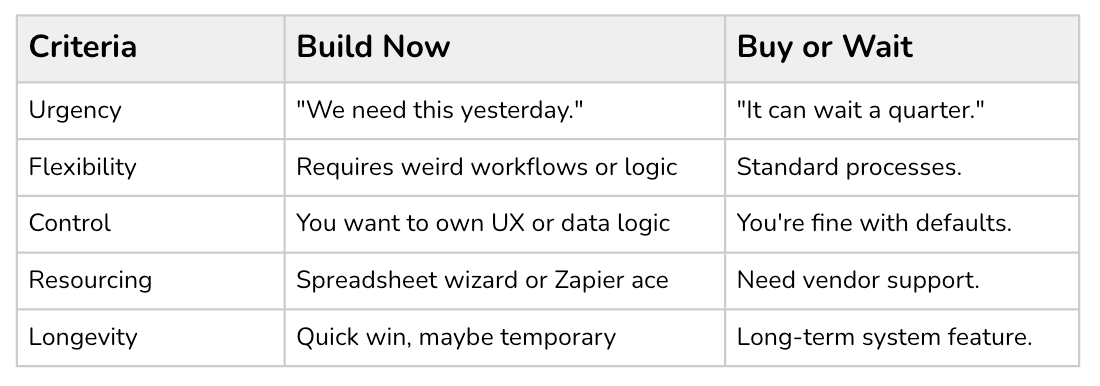

A Quick Framework for Internal Tooling

Here’s a matrix to consider when to build and when to buy or wait when you’re planning an internal tool.

Said another way, it helps you to measure the pain that’s happening in the organization right now, but it doesn’t tell you about the technical debt of maintaining an internal tool long term.

When you’re building an internal tool, it helps to consider when it would be time to sunset it. Whether the reason is that the tool is no longer needed or that it is superseded by a commercial tool (or an engineered one), start with that end in mind.

How to Start: A Friction Audit

Designing an internal tool looks a lot like the product development process in the “paper prototype” phase.

The goal is to get to something you can test and use very quickly.

Here's a simple way to begin:

List your team's repetitive, annoying tasks.

"What are we copy-pasting every week?" is a great prompt.

Prototype a fix in under a day.

Zapier, Retool, Apps Script, dbt macros — use whatever your team already knows.

Name it and share it.

Every internal tool deserves a name and a doc. If it's good, it'll spread.

Decide how you'll measure it and when it counts as successful.

Success might be as simple as awareness and use, or as specific as “it is used x times a day.”
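Measuring "used x times a day" can be very lightweight. The sketch below assumes your tool appends an ISO-8601 timestamp to a log each time it runs; the log format, the sample timestamps, and the function names are all hypothetical.

```python
from collections import Counter
from datetime import datetime


def daily_usage(timestamps):
    """Count tool runs per calendar day from ISO-8601 timestamp strings."""
    return Counter(datetime.fromisoformat(ts).date().isoformat() for ts in timestamps)


def met_target(usage, target_per_day):
    """True when every day that appears in the log meets the target."""
    return bool(usage) and all(count >= target_per_day for count in usage.values())


# Made-up log entries for illustration only.
usage_log = [
    "2025-04-21T09:15:00",
    "2025-04-21T13:40:00",
    "2025-04-22T10:05:00",
]

usage = daily_usage(usage_log)
for day, count in sorted(usage.items()):
    print(f"{day}: {count} run(s)")
print("Hit a target of 1 run per day:", met_target(usage, target_per_day=1))
```

Note that days with zero runs never show up in the log, so this only checks days the tool was used at all. That is fine for a first pass, and it is worth tightening if the tool becomes important.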

Not sure where to start? Try one of these ideas or make up one of your own:

Lead Source Cleaner: Normalize junk values before they enter Salesforce

Slack Notifier: Alert reps if they haven't logged activity this week

Metric Drift Checker: Alert when pipeline coverage or win rate drops 10%

Auto-Router: Re-assign stale inbound leads using assignment rules

Outreach List Builder: Combine ICP filters + product usage + persona data into a ready-to-export list
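As one example from the list above, a Lead Source Cleaner can start as a lookup table and a few lines of normalization. This is a sketch under assumptions: the canonical values and their variants are made up, and the step that writes the cleaned value into Salesforce is deliberately left out.

```python
import re

# Hypothetical canonical lead sources and the junk variants that map to them.
CANONICAL_SOURCES = {
    "Webinar": {"webinar", "webinars", "web seminar"},
    "Paid Search": {"paid search", "ppc", "google ads", "adwords"},
    "Referral": {"referral", "referred", "word of mouth"},
}
UNKNOWN = "Unknown"

# Flatten to variant -> canonical for fast lookups.
LOOKUP = {
    variant: canonical
    for canonical, variants in CANONICAL_SOURCES.items()
    for variant in variants
}


def clean_lead_source(raw):
    """Map a raw lead source to its canonical value, or UNKNOWN if unrecognized."""
    if raw is None:
        return UNKNOWN
    # Lowercase, then collapse punctuation, underscores, and whitespace into single spaces.
    key = re.sub(r"[^a-z0-9]+", " ", raw.lower()).strip()
    return LOOKUP.get(key, UNKNOWN)


for raw in ["  WEBINAR ", "Google-Ads", "word_of_mouth", "asdf", None]:
    print(repr(raw), "->", clean_lead_source(raw))
```

Routing unrecognized values to `Unknown` instead of guessing keeps the junk visible, so someone can review it and extend the mapping over time.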

Success looks like improving something incrementally in your environment that gets frequent use.

The Future of Internal Tooling

How do we evolve these “one-off” ideas into a true internal product? You need to answer larger questions like:

How do we enable people to do what they want with the tools they already have?

If we could synthesize a new way of working by combining efforts across tools, what would we do?

Is there a small bit of automation or scripting that could invisibly make this easier without people needing to know there is a "system"?

You don't need a full engineering team to start building internal tools.

Your operations team already has the domain knowledge; they need the right tools and permissions. The ROI on internal tooling isn't only in saved hours. It's in the insights and opportunities you capture while your competitors are still waiting for their SaaS provider to catch up.

What’s the takeaway? You’re already building internal tools — unofficially, inefficiently, and invisibly. Turn shadow IT into ops velocity with standardized, supported micro-tools.

Links for Reading and Sharing

These are links that caught my 👀

1/ Moving from clicks to… - Amelia Wattenberger wrote a delightful piece on improving the way we engage with UI and UX. The core message is to build for humans and their preferred engagement instead of forcing them into strained interactions. (Also, if you believe in Conway’s law, you’ll recognize that organizations that build human-friendly interfaces will be better places for humans to work.)

2/ Building more reliable LLM apps… - If you’re building anything with AI, read 12 Factor Agents and see principles smart people use to guide their engineering and make LLM apps more reliable. These ideas on control flows and data security are good for other distributed services too (not just AI). h/t to Kyle Williams for the find.

3/ Teaching people how to leverage AI … - Once you are using AI tools, it becomes obvious where they work and where they are a bad choice or use case. One of the most obvious places where AI shines is in synthesizing a ton of information, like in news article searches. But how does that synthesis change the summary or outcome? I appreciate Mike Caulfield’s SIFT toolbox, a prompt to help you understand, interpret, and parse the news as seen by AI. You’ll learn a lot more about the news when approaching it with a critical thinking cap. This prompt can help.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon