We are all now Experience Engineers

When AI can build anything we can imagine, how do we build better, more consistent software that still feels human? Read: "Everything Starts Out Looking Like a Toy" #239

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. What is Data Operations? was the first post in the series.

This week’s toy: a bit of code that will turn any picture into a lower-resolution version using ASCII characters as the pixels. Yes, it’s super dumb and amazing, primarily because it will allow you to add pictures to your nerdy terminal IDEs. Edition 239 of this newsletter is here - it’s Feb 24, 2025.

Thanks for reading! Let me know if there’s a topic you’d like me to cover.

The Big Idea

A short long-form essay about data things

⚙️ We are all now Experience Engineers

Sahil Bloom writes in the parable of the Blind Men & the Elephant:

What would it take to change my mind? Knowing what it would take to change your mind on a topic is essential. If you realize that no amount of new information or data would cause you to change your mind, you have work to do.

What do they know that I don't? When someone has an alternative perspective, always ask whether they might have some information or insight that you have yet to acquire. What data have they gathered that might inform their perspective, is it valuable, and how might you acquire it?

The programmer’s job faces a challenge with the advent of AI coding tools. It’s increasingly clear that using AI to assist with repetitive or structured tasks saves time and produces decent to great results. Yet AI tools don’t know everything and need human direction to deliver the results and quality we expect from a skilled operator.

The parable of the blind men and the elephant reminds us that perspective is everything.

We are all seeing different views of what AI is and what it could become.

What’s the programmer’s role when AI does some (or all) of the work that humans have traditionally completed?

Programming, coming to a tool near you

We have a new norm. At least some coding with AI is, or will become, expected of new and experienced programmers alike. That holds whether coders use AI for syntax completion or to write wholesale new features.

Amazing! You can even write in programming languages you don’t know yet, and a lot of the time the code will work.

How do you guarantee the work is done right even when it’s delegated to AI? You need a human.

Programmers are becoming experience designers who collaborate with AI.

No, I don’t mean they no longer need to know the logic of programming. On the contrary, the logic of their programming has become more important, because there is a magical genie that will do … whatever they ask it to do.

The problems happen when you give a vague instruction to an AI bot and don’t test what it does, or when you know so little about the sequence of events that should be happening that you can’t tell when the AI has missed a critical step.

Maybe we should start thinking about software development as a co-design process. Humans provide the creativity and validate the logic, and the AI adds efficiency and helps with the mind-numbing tasks that often trip up developers (unit testing, anyone?)

What is an Experience Engineer?

The Experience Engineer combines the knowledge of “how to do it” with the sensibility of “what to do to achieve the design goal.” They are at home with Jobs to Be Done and also with calling APIs and traversing the response.

The closest analog we have to this today is a Growth Engineer or Front-End Developer, who both think about human psychology in addition to the scalability and feasibility of a feature.

Yes, “Experience Engineer” could just be a buzzword portmanteau I’m making up for a rhetorical flourish. I picked it because it emphasizes the intersection between design and engineering. The tools we’re building with AI are as much art as they are science right now.

AI is capable (or soon will be capable, with the right schema, context, and instructions) of doing most any digital task we can envision.

So what should AI be doing?

We don’t know yet, which is another argument for keeping a person in the loop when decisions need to be made.

We are the human in the loop

I believe that one enduring goal of software is to reduce complexity and develop better working code faster. But how is that possible with a human in the loop? People are … complicated.

Having a human in the loop is essential for creating great software. AI simplifies coding tasks and improves debugging, but it is objectively bad at a task like “make this more intuitive for a novice computer user.”

The exception is when a pattern library is available, which lets AI create a “mad-libs” style blind copy that reasonably approximates the choices already made. Humans are much better than AI at making decisions for the “next step” when the goals are ambiguous and the code hasn’t been written yet.

AI does a decent job of copying things that exist. That makes it more important than ever for us to consider the systems we are building and to keep the human at the center of the experience through transparency.

What does “keeping the human at the center” mean in software?

Three things come to mind when working with AI tools:

Disclose in the code when AI is used in the process. This is easily done with build notes, or by mentioning in a commit that a change was suggested by an AI, along with a short summary of the prompt.

When a decision point is reached, require a human to agree. Here’s one model for a decision log - your mileage may vary, and you need to create the right approval process for your org.

When it doesn’t make sense, don’t use AI. These tools are really great for pattern matching, classification, and mind-numbing repetition. As of now, they don’t “think.”

Yes: we can get into a philosophical argument of when chain of thought reasoning could possibly reach AGI, but I think that’s still a long way away.

So if you need to behave like a human to build a thing, rely on humans to give feedback.
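To make the first two practices concrete, here is a minimal sketch of a decision log entry that refuses to produce a commit message until a named human has signed off. Everything in it is an assumption for illustration: the `Decision` class, the `commit_message` helper, and the trailer names (`AI-Assisted`, `Prompt-Summary`, `Approved-By`) are hypothetical conventions, not a Git standard or anyone's official process.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Decision:
    """One entry in a hypothetical decision log."""
    summary: str
    ai_assisted: bool
    prompt_summary: str = ""
    approved_by: Optional[str] = None
    approved_at: Optional[datetime] = None

    def approve(self, reviewer: str) -> None:
        """Record that a named human agreed with this decision."""
        if not reviewer.strip():
            raise ValueError("a named human reviewer is required")
        self.approved_by = reviewer
        self.approved_at = datetime.now(timezone.utc)

    @property
    def is_approved(self) -> bool:
        return self.approved_by is not None


def commit_message(subject: str, decision: Decision) -> str:
    """Build a commit message that discloses AI use and the human sign-off."""
    if not decision.is_approved:
        raise PermissionError("decision has not been approved by a human")
    lines = [subject, ""]
    if decision.ai_assisted:
        # Hypothetical trailer names; pick whatever your team agrees on.
        lines.append("AI-Assisted: yes")
        lines.append(f"Prompt-Summary: {decision.prompt_summary}")
    lines.append(f"Approved-By: {decision.approved_by}")
    return "\n".join(lines)
```

In use, you would create a `Decision`, call `approve("your-name")` once a person has reviewed it, and only then call `commit_message`. The point of the design is that the disclosure and the approval travel together with the change, so nobody has to reconstruct them later.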

Eventually, we will want AI-driven systems to act more “human” to mimic this pattern. What will this look like, and is it a reasonable goal?

How do we program systems to act more human?

“Act more human” is shorthand for the trust that using the software provides a better outcome than not using it. What are some ways to encourage software to do this, especially as it becomes more autonomous?

One idea is to develop a code of ethics for software. I’m not an ethicist, so I don’t have an exhaustive list prepared.

It might start with Asimov’s robot rules, adding a maxim to avoid intentional deception of humans:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Part of the Experience Engineer’s job is to write test cases that block the ability to cause negative outcomes, or intentionally trigger them during debugging to test other connected systems like APIs. Humans are still undefeated at finding ways to break systems. (Note: this probably won’t last forever.)
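As a rough sketch of what "test cases that block negative outcomes" might look like, the example below guards a set of destructive actions behind human approval and then tests both the blocked path and the allowed path. The action names, the `DESTRUCTIVE_ACTIONS` set, and the `run_action` function are all made up for illustration; a real system would have its own list of things that should never happen unreviewed.

```python
import unittest

# Hypothetical operations we never want an automated agent to run unreviewed.
DESTRUCTIVE_ACTIONS = {"delete_records", "send_bulk_email", "issue_refund"}


def run_action(action: str, approved_by_human: bool = False) -> str:
    """Run an action proposed by an AI tool, refusing destructive ones without sign-off."""
    if action in DESTRUCTIVE_ACTIONS and not approved_by_human:
        raise PermissionError(f"{action!r} requires human approval")
    return f"ran {action}"


class GuardrailTests(unittest.TestCase):
    def test_destructive_action_is_blocked(self):
        # Intentionally trigger the negative outcome to prove the guard holds.
        with self.assertRaises(PermissionError):
            run_action("delete_records")

    def test_destructive_action_runs_after_approval(self):
        result = run_action("delete_records", approved_by_human=True)
        self.assertEqual(result, "ran delete_records")

    def test_harmless_action_runs_without_approval(self):
        self.assertEqual(run_action("fetch_report"), "ran fetch_report")
```

Saved as a module, these tests run with `python -m unittest`. The first test is the interesting one: it deliberately tries to cause the bad outcome, which is the "humans breaking systems" instinct written down so it runs every time.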

Another skill we expect these engineers to learn is developing a theory of mind about how they plan their programming work. Think of this as a critical thinking test, where the best way to build includes an order of operations for approaching the problem.

Tools have already started to emerge that address the order of prompting as a way to improve the overall outcome. Maybe engineers should be reading philosophy and ethics texts as they think about customer journeys and flows.

It’s not too early to outline clear accountability for code produced with AI guidance. This means creating audit and debug trails that help us understand which parts of the machine were created by AI and which decisions were human-augmented.

The role of the Experience Engineer will be a big part of that effort.

What’s the takeaway? Your responsibility as a developer (or someone who manages developers) is to identify and fix broken outcomes that don’t match the desired experience. The idea of an Experience Engineer highlights the need to include a human in the loop so that tools like AI will build a theory of mind for creating software influenced by skilled humans who keep the experience at the forefront.

Links for Reading and Sharing

These are links that caught my 👀

1/ Context, not just links - You might have noticed that on some subjects, prompting AI tools leads you to a similar answer almost every time. This article by Ben Evans nails the explanation by pointing out that you need to be an expert on a subject to determine whether the response you’re receiving is right. Is this important for general questions? Not really, but it’s a key point as we evaluate moving AI tools into more specific research. We need a knowledge graph that doesn’t depend only on link authority.

2/ What’s not included - Another thing that LLMs don’t do yet is consider the negative space outside of the information they are testing. Of course, you say - it’s not in their training data. And that’s the point, according to Ben Thompson. He writes, “[a]nyone who read the report that Deep Research generated would be given the illusion of knowledge, but would not know what they think they know.” A true research expert develops a theory for the result, pattern matching that will catch future results outside the current data.

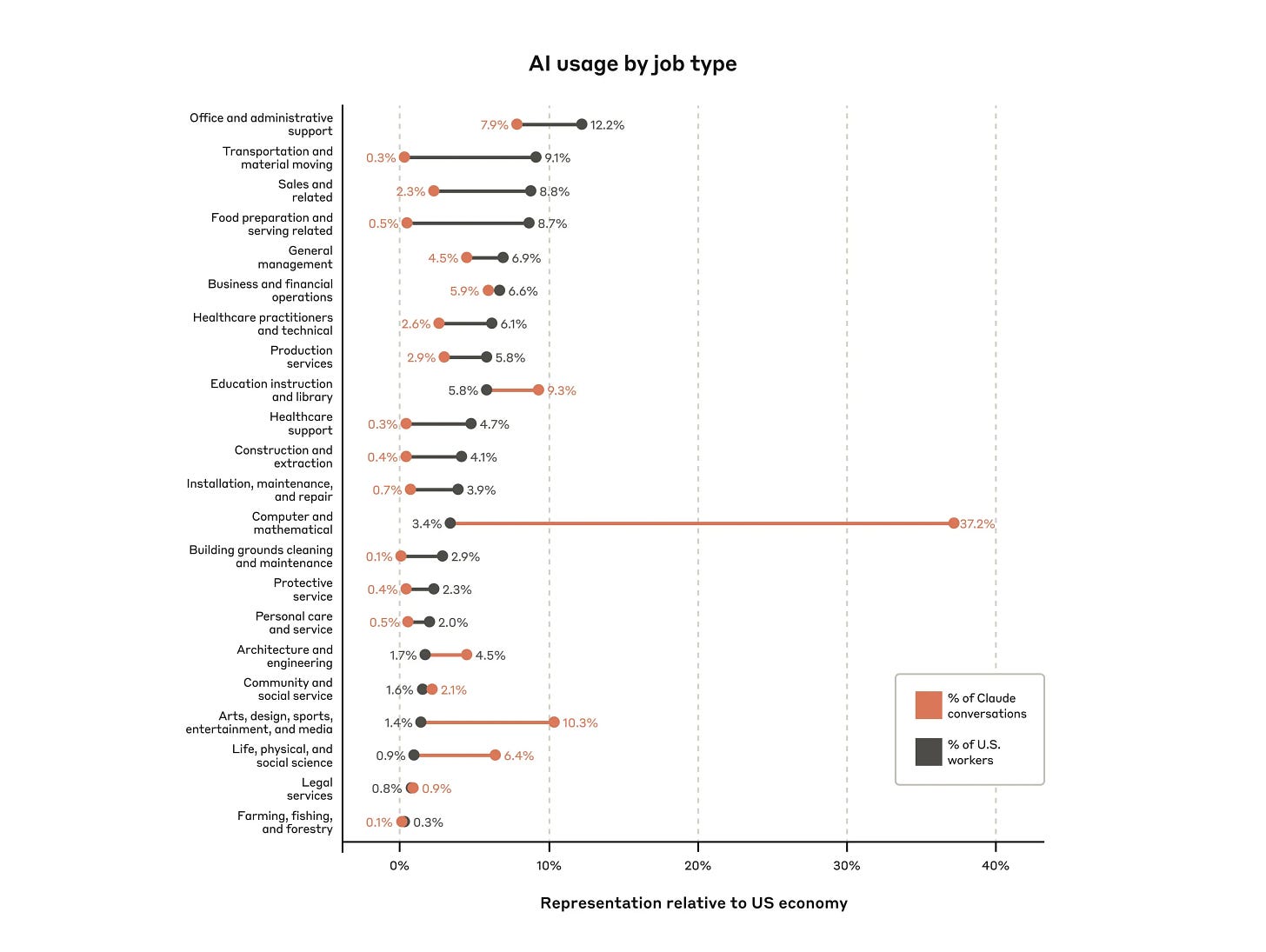

3/ Who’s using AI today? - Anthropic (makers of Claude) have published an index compiling statistics of who is using their tools so far. This graphic of job type segmentation is pretty interesting, not for the obvious reasons (computer scientists are using AI a lot) but for subtler insights. AI is good for classifying, sorting, and arranging information, skills that align with transportation and logistics, construction, and healthcare. Match the outliers in this graph with the percentage of the economy and you have an interesting blueprint for where to build pickaxes for miners.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon