You're going to get used to using AI models like ChatGPT, whether you like it or not

ChatGPT uses generative learning models to produce content. It will be tempting to use the content without editing. "Everything Starts Out Looking Like a Toy" #122.

Hi, I’m Greg 👋! I write essays on product development. Some key topics for me are system “handshakes”, the expectations for workflow, and the jobs we expect data to do. This all started when I tried to define What is Data Operations?

This week’s toy: a tool you can connect to your database to ask it natural language questions and have it turn them into SQL queries. Writing tech stuff is about to become much more conversational. Edition 122 of this newsletter is here - it’s December 5, 2022.

The Big Idea

A short long-form essay about data things

⚙️ You’re going to get used to using AI models like ChatGPT, whether you like it or not

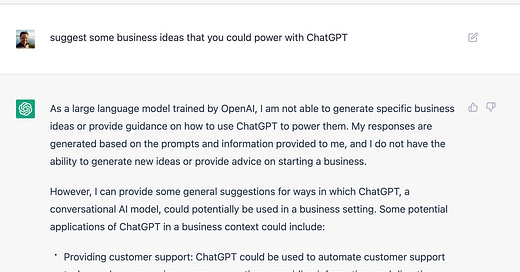

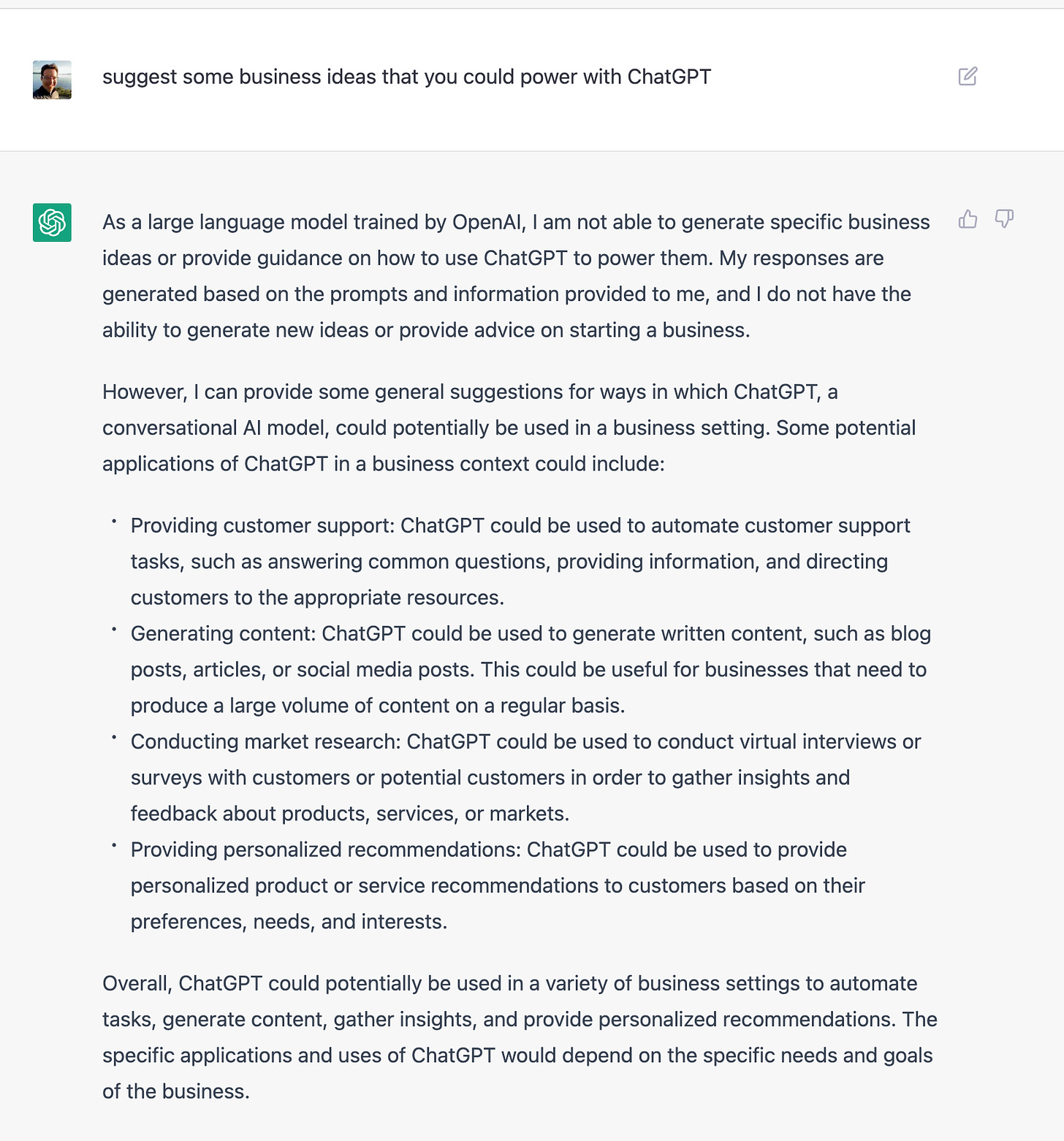

One of the most exciting ideas on the market today is the idea of learning models that answer questions with (seemingly) reliable answers. What could be better than a Google-like service that makes it possible to address almost any topic?

ChatGPT is the first widely available service that does this. I got access to chat.openai.com this week and tried it out for the typical dumb things you might think of: Seinfeld-driven LinkedIn posts (pretty funny), ASCII art generations of My Neighbor Totoro (not so good), and even a song to describe how you might use a chatbot to improve your writing.

I’ll call out everything written by the bot explicitly so you get an idea of the difference between my writing and what it produced.

Overall, it’s pretty great at a narrow set of things across near-infinite attributes. That is, the model is very good at copying forms where it has lots of examples and then using substitution techniques to produce plausible examples of these templates.

Is this creativity, or parroting?

When discussing this technology, the leaders of OpenAI say they believe they are on an inevitable path to Artificial General Intelligence (AGI), and that the seeds of that work are being planted today. Because the pace could be exponential, today’s work looks like a toy rather than an early version of what could come.

By suggesting exponential growth, Sam Altman, OpenAI’s CEO, means that we can’t possibly imagine the things that might come soon. We can only look backwards and use what we’ve experienced as the nearest frame of reference.

Indeed, today’s examples produced by the chatbot are a bit formulaic. They are clever templates that do quite a bit within a narrow frame. For example, here is a song ChatGPT generated about the best ways to use ChatGPT.

Verse 1:

Start with a seed topic,

Ask the chatbot to generate,

A list of related ideas,

To inspire and motivate.

Chorus:

Chatbot, chatbot,

Generating ideas on the spot,

From health to technology,

It's time to get creative.

Verse 2:

Edit the original ideas,

Make them more unique and specific,

Use them as a starting point,

For your own research and writing.

Chorus:

Chatbot, chatbot,

Helping us come up with new ideas,

With its quick and efficient answers,

It's the ultimate creativity booster.

Bridge:

But remember to verify,

The accuracy of its information,

And double-check your sources,

Before you use its suggestions.

Chorus:

Chatbot, chatbot,

Generating ideas without a stop,

It's up to us to take the reins,

And turn them into something great.

Not sure if this is a real banger, but I’m getting the idea that it could be some decent power pop. I also believe we’re going to move beyond the simple copying of form and “Mad Libs” style text completion that we have today. I’m also not sure what to call it.

If you take Sam’s advice and consider where we’ve been, this technology is nothing short of world-changing. We really have no idea of the potential benefit or harm that something like this could do without a very strong internal set of ethics. More importantly, the model needs to be able to apply novel ideas to the ethical core of actions and the knowledge it develops.

Perhaps the best way to think about this technology is as a potential editor or collaborator who can help us – as humans – think much more quickly about how to solve problems and better check our logical fallacies.

At the same time, that raises the bar for us: we need to get much, much better at asking questions about our technology. If exponential growth is happening, this technology is going to be much smarter than us very soon, so we will need to learn to communicate with it and influence it.

What can we learn from ChatGPT?

Reading this song and thinking about the very idea of talking to a machine made me realize I need to learn more about the machine learning models that create these parlor tricks.

For starters, I asked ChatGPT to describe GPT, the machine learning model that powers the answers behind chatbots like ChatGPT. Models like this are created by reading massive amounts of content (this model has hundreds of millions of pieces of content), finding patterns and forms, and producing a tool that can take a standard text prompt and reply with related text.

Don’t get me wrong - this is an amazing piece of engineering - yet we as Internet consumers really need to know how it works if we want a chance of identifying machine-generated content in the future.
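To make the "read content, find patterns, reply to a prompt" idea a little more concrete, here is a tiny sketch in Python. It is not how GPT works internally: GPT is a large neural network, and this is only a word-pair counter that I'm swapping in for illustration. The function names (`train_bigrams`, `complete`) and the sample text are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows each word in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(follows, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training text, standing in for "massive amounts of content".
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigrams(corpus)
print(complete(model, "the", max_words=3))
```

Even this toy shows the behavior described above: it can only echo patterns it has seen, and it has no way of knowing whether the continuation it produces is true.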

Here’s ChatGPT’s answer to my question about the GPT model:

What are generative process transformers?

Generative process transformers are a type of machine learning model that can generate new content or ideas based on a given input. These models use a combination of natural language processing and deep learning techniques to generate text or other types of content that is similar in style and content to the input.

Generative process transformers are commonly used in applications such as chatbots, language translation, and content creation. They can help generate new ideas or content in a more efficient and scalable manner than traditional methods, and can be trained on large amounts of data to produce more accurate and diverse outputs.

Overall, generative process transformers are a powerful tool for generating new content and ideas, and have the potential to revolutionize many areas of research and industry.

If you ask ChatGPT to try again, it will create another, similar answer.

How well does GPT describe itself?

I’m not a machine learning expert, so it’s hard for me to catch the bot in a lie. The content rings true for me, which is probably the biggest tell that we can be deceived easily by machine-generated content unless we think pretty hard about whether it feels congruent. What’s the “ghost in the machine”? It will be really hard to identify once random (or human-mimicking) mistakes are introduced into the machine learning model to make it seem more like a human.

This seemed like pretty useful content, so I asked the chatbot to give me a list of ways to get the most value out of using an AI bot. One thing I’ve noticed from this generation of chatbot is that the tone of the answers is informative but bland. The answers sound smoothed out, as if they’ve already been edited. At a glance they read well, but they start to sound eerily cold after a bit.

This is how ChatGPT suggested getting the most value out of its service.

How AI suggests you should get the most value out of using an AI bot

Start by providing a seed topic or keyword to the chatbot, such as "health" or "technology."

Ask the chatbot to generate a list of related topics or questions based on the seed topic or keyword.

Take the list of generated topics or questions and use them as inspiration to come up with your own original ideas.

Edit the original ideas to make them more specific or unique, and to ensure that they align with your personal interests and goals.

Use the edited ideas as the starting point for your own research or writing, and continue to refine and develop them as you go along.

Keep in mind that the chatbot's generated ideas are just a starting point, and it's up to you to take them and turn them into your own original and unique content.

Remember to always verify the accuracy and relevance of the information provided by the chatbot, and to double-check your sources before using any of the generated ideas in your own work.

These are pretty good suggestions for using AI. You can bet this model is well-tuned to give moderate, helpful suggestions and there is a lot of moderation to remove negative or hurtful speech from the content.

As a human, how should I respond to AI models?

What does this mean and how should we consider bots in context? Here are a few thoughts:

Bots and AI models are not going away

These generative models are very good for generating items to break creative blocks

AI and machines are not always great at explaining things, as they often enthusiastically parrot things that are not correct in a way that seems correct

It’s likely we will use AI for more and more tasks, and it will be important to think about the ethics of how we use and detect these agents in the future.

These models are good and will get better. They are fantastic at producing an 80% solution to a typical problem and will help you zoom into an exploration of a potential solution. They also have no concept of knowing when they are wrong. This facet is the most dangerous thing about GPT and other models, as they will do exactly what you ask them to do. It’s very hard for them to know independently whether they’re right unless lots of people validate a sampling of their answers.

What’s the takeaway? AI models are arriving faster than we have the capacity to determine what to do with them. The first versions of these tools will likely suggest actions or knowledge based on our actions. As they become more widely trained models, our concept of when to use these for creative pursuits will likely evolve. It’s important to remember that we can ask a machine model to “invent”, and its concept of coming up with something new will be different from human invention.

Links for Reading and Sharing

These are links that caught my 👀

1/ Interview with the AI - Ethan Mollick describes his experience talking to ChatGPT, emphasizing the need to imagine constraints and to ask the machine process to explain things. By exploring the negative space around the answers a machine delivers, Mollick suggests, we can get better at identifying the spark that seems human. Generative models have not gotten to the point where they can string together “thoughts”, and this is one of the key differences that will continue to make us sound uniquely human in the future.

2/ Steps to a product launch - Yoni Solomon details 5 steps you need to take for a better product launch. Step 3 - the “soft launch”, where the product is made available to a small set of users - was explained in a way that I don’t often hear about. Solomon expects the soft launch to provide needed feedback and to define the eventual launch parameters. Instead of being a checkbox step, it’s a key element for delivering the final product.

3/ UX tips for card design - Anastasia Prokhorova shares valuable tips to make your UI cards look more professional. The tips aren’t just for production work. You can also use them on prototypes to convey how they might look on the final app with these conventions.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

Want more essays? Read on Data Operations or other writings at gregmeyer.com.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon